Research & Discovery Technologies

Improved Data to Support Decision Making

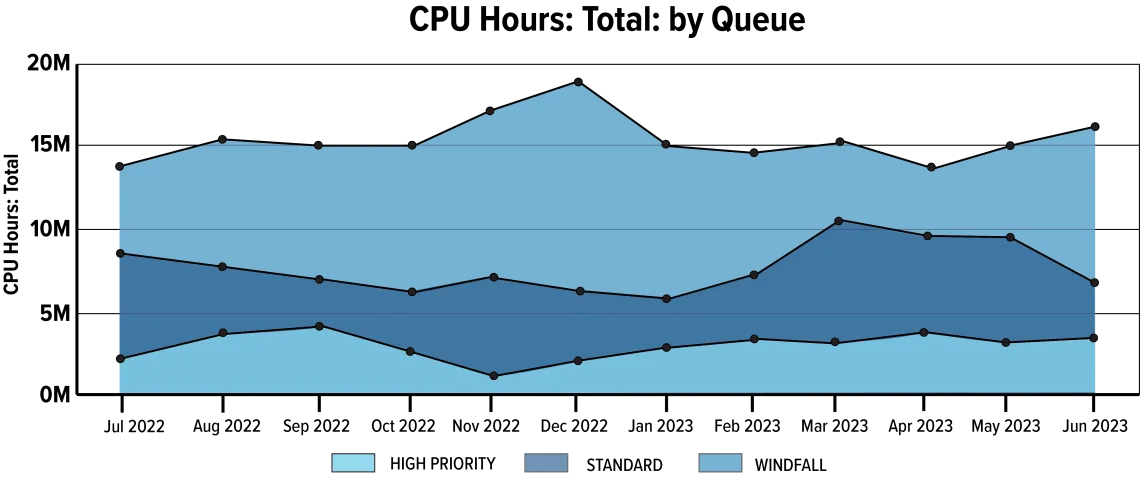

The CIO Division’s Research & Discovery department provides high-performance computing (HPC) resources to research faculty at no cost. Between allocated time and windfall capacity, these resources run at 100% utilization, giving research faculty access to extensive processing capabilities.

As a leading research institution, the University of Arizona acquires supercomputing clusters with diverse hardware configurations, tailored to the computational needs faculty identify for their research. These needs may include large-scale parallel processing, high-throughput processing, or the specialized graphics processing required for research data visualization. The University of Arizona makes this computing capacity available 24/7, 365 days a year.

Faculty members are allocated around 40,000 compute hours per month. Allocations that go unused leave surplus computing power. This surplus, known as windfall, is put to work for research through a queuing mechanism that schedules jobs into otherwise idle processing time. Because windfall jobs are always waiting in the queue, the clusters consistently operate at 100% utilization, serving both faculty allocations and standing windfall jobs at all hours. One faculty member's research illustrates the value of the windfall service.
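The windfall mechanism can be illustrated with a toy scheduler. This is a hypothetical model, not the University's actual scheduler: it assumes hourly time steps, first-in-first-out queues, allocated ("standard") jobs that take priority, and windfall jobs that fill idle cores and are preempted (requeued) when an allocated job needs room. The names `Job`, `simulate`, and `_preempt_for` are illustrative only.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    cores: int
    hours: int              # remaining wall-clock hours
    windfall: bool = False  # windfall jobs are assumed to be preemptible


def _preempt_for(job, running, windfall_q, free):
    """Requeue running windfall jobs until `job` fits; return the new free-core count."""
    victims = [r for r in running if r.windfall]
    if free + sum(v.cores for v in victims) < job.cores:
        return free  # preemption alone cannot make room, so leave windfall running
    for v in victims:
        if free >= job.cores:
            break
        running.remove(v)
        windfall_q.appendleft(v)  # simplification: resumes later with its remaining hours
        free += v.cores
    return free


def simulate(total_cores, standard, windfall, steps):
    """Return per-hour utilization (busy cores / total cores) of a toy cluster."""
    standard_q, windfall_q = deque(standard), deque(windfall)
    running, utilization = [], []
    for _ in range(steps):
        running = [j for j in running if j.hours > 0]  # finished jobs release cores
        free = total_cores - sum(j.cores for j in running)
        # Allocated (standard) jobs have priority and may preempt windfall jobs.
        while standard_q:
            job = standard_q[0]
            if job.cores > free:
                free = _preempt_for(job, running, windfall_q, free)
            if job.cores > free:
                break  # head job must wait for cores to free up
            running.append(standard_q.popleft())
            free -= job.cores
        # Windfall jobs soak up whatever capacity would otherwise sit idle.
        while windfall_q and windfall_q[0].cores <= free:
            wjob = windfall_q.popleft()
            running.append(wjob)
            free -= wjob.cores
        utilization.append(1 - free / total_cores)
        for j in running:
            j.hours -= 1
    return utilization


std = [Job("std-a", cores=60, hours=5), Job("std-b", cores=80, hours=3)]
wf = [Job(f"wf-{i}", cores=1, hours=2, windfall=True) for i in range(500)]
print(simulate(100, std, wf, steps=10))
```

In this run every hour reports full utilization (1.0), and both allocated jobs still complete because windfall work yields to them. With an empty windfall list, idle cores show up as utilization below 1.0.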

Mihailo Martinović, PhD, Researcher and Scientist with the College of Science Lunar & Planetary Laboratory, uses HPC to study the solar wind.

While distant astrophysical objects are observed with telescopes on Earth and in space, the stream of particles our Sun emits, the solar wind, gives us a unique opportunity to probe the space environment in situ, or in its original place, examining a medium so close to a vacuum that it cannot be reproduced in a laboratory. Using spacecraft to probe this plasma, which streams outward at hundreds of kilometers per second, began in the mid-20th century and continues today with a fleet of former and current missions, mostly built by NASA and ESA.

During the 2023 academic year, almost 6 million compute jobs were executed, consuming a total of 321,783,285 CPU hours.

One CPU hour corresponds to the work of one core in use for one hour.
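The CPU-hour definition above makes usage the product of cores and wall-clock hours, so very different jobs can cost the same. The sketch below also divides the academic-year total by the job count; treating "almost 6 million" as exactly 6,000,000 is an assumption that slightly understates the true average.

```python
def cpu_hours(cores: int, wall_hours: float) -> float:
    """One core busy for one hour is one CPU hour, so usage scales with both."""
    return cores * wall_hours


# Same footprint: 4 cores for 10 hours, or 40 cores for 1 hour.
print(cpu_hours(4, 10), cpu_hours(40, 1))  # 40 40

TOTAL_CPU_HOURS = 321_783_285  # 2023 academic-year total, from the text
JOBS = 6_000_000               # assumption: "almost 6 million" rounded up
average = TOTAL_CPU_HOURS / JOBS
print(f"about {average:.1f} CPU hours per job")  # about 53.6 CPU hours per job
```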

Each researcher gets 100,000 CPU hours each month on Puma and 70,000 on Ocelote. The 100,000 hours equate to running about 1½ compute nodes 24/7. The high-priority partition is for researchers who contribute research funds to buy additional compute nodes; they receive an allocation, on top of the standard amount, commensurate with the funding they contributed.
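The "about 1½ compute nodes" figure can be checked by treating the allocation as core hours and dividing by one node's monthly capacity. The node size is an assumption here: 94 usable cores per standard Puma node and a 30-day month of continuous use. With 96 cores per node the result is about 1.45, so the 1½ approximation holds either way.

```python
CORES_PER_NODE = 94        # assumption: usable cores on a standard Puma node
HOURS_PER_MONTH = 24 * 30  # assumption: a 30-day month of round-the-clock use


def nodes_kept_busy(monthly_cpu_hours: float, cores_per_node: int = CORES_PER_NODE) -> float:
    """How many whole nodes an allocation could occupy 24/7 for one month."""
    return monthly_cpu_hours / (cores_per_node * HOURS_PER_MONTH)


print(round(nodes_kept_busy(100_000), 2))  # 1.48, i.e. about 1 1/2 nodes
```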

These observations offer hundreds of millions of “snapshots” of interplanetary space, a one-of-a-kind plasma laboratory. As the volume of available data has grown exponentially, surveying entire mission datasets has become very computationally expensive and now requires millions of CPU hours.

The university’s option to run HPC jobs on windfall hours made these computations possible and enabled two large projects. First, solar wind plasma density and temperature can be measured very accurately from the observed fluctuating interplanetary electric field. Each observed electric field spectrum takes several minutes of computation, and a spectrum is recorded every 4.4 seconds over the 30-year lifetime of NASA’s Wind mission, amounting to ~200 million observations.
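A back-of-the-envelope estimate shows why this first project needs millions of CPU hours. The cadence and mission length come from the text; the 2 minutes of single-core time per spectrum is an assumed, illustrative value for "several minutes", and uninterrupted coverage is assumed, so the spectrum count is an upper bound in line with the ~200 million quoted above.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600
MISSION_YEARS = 30         # Wind mission lifetime, from the text
CADENCE_S = 4.4            # one electric field spectrum every 4.4 s, from the text
MINUTES_PER_SPECTRUM = 2   # assumption: illustrative value for "several minutes" on one core

n_spectra = MISSION_YEARS * SECONDS_PER_YEAR / CADENCE_S
cpu_hours = n_spectra * MINUTES_PER_SPECTRUM / 60
print(f"{n_spectra / 1e6:.0f} million spectra, ~{cpu_hours / 1e6:.1f} million CPU hours")
# 215 million spectra, ~7.2 million CPU hours
```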

Second, highly sophisticated particle analyzers can detect individual plasma particles. From these measurements, one can infer the presence of electromagnetic waves, which are believed to govern the dynamics of the solar wind. This inference relies on the mathematically complex framework of linear wave theory and requires hours of CPU time per measurement. Using HPC resources, almost entirely through the windfall queue, Dr. Martinović and his team processed more than 5 million intervals from various missions. They then used the resulting massive dataset to train Stability Analysis Vitalizing Instability Classification (SAVIC), a user-friendly machine learning algorithm that can process millions of further observations in seconds. SAVIC has greatly broadened access to the plasma stability framework: it is publicly available as a Python package and can be used to its full extent without expertise in plasma instabilities.
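The general pattern behind SAVIC, paying for an expensive analysis once to label a large dataset and then training a fast model on those labels, can be sketched as a surrogate classifier. This is not SAVIC's code or algorithm: `expensive_label` is a hypothetical toy rule (unstable when the temperature anisotropy T_perp/T_par exceeds 1 + a / beta**b, with illustrative constants) standing in for hours-long linear stability calculations, and the model is a plain k-nearest-neighbor vote chosen for brevity.

```python
import heapq
import math
import random


def expensive_label(beta, anisotropy, a=0.5, b=0.5):
    """Hypothetical stand-in for an hours-long stability analysis (1 = unstable).

    Toy rule: unstable when T_perp/T_par exceeds 1 + a / beta**b.
    The constants are illustrative, not fitted values.
    """
    return int(anisotropy > 1 + a / beta**b)


def features(beta, anisotropy):
    """Plasma parameters span decades, so compare observations in log space."""
    return (math.log10(beta), math.log10(anisotropy))


def random_observation(rng):
    """Draw a synthetic (beta, anisotropy) pair, log-uniform over a wide range."""
    return 10 ** rng.uniform(-2, 2), 10 ** rng.uniform(-1, 1)


def knn_predict(train, x, k=5):
    """Majority vote among the k labelled points nearest to feature vector x."""
    nearest = heapq.nsmallest(k, train, key=lambda item: math.dist(item[0], x))
    return int(sum(label for _, label in nearest) > k // 2)


rng = random.Random(0)

# Expensive step, paid once: label a large training set with the slow analysis.
train = [
    (features(beta, aniso), expensive_label(beta, aniso))
    for beta, aniso in (random_observation(rng) for _ in range(1000))
]

# Cheap step: classify new observations without rerunning the slow analysis.
new_obs = [random_observation(rng) for _ in range(200)]
agree = sum(
    knn_predict(train, features(beta, aniso)) == expensive_label(beta, aniso)
    for beta, aniso in new_obs
)
accuracy = agree / len(new_obs)
print(f"agreement with the slow labels: {accuracy:.0%}")
```

Once trained, each prediction costs only a nearest-neighbor search, which is the kind of speedup that lets a surrogate process new observations in seconds rather than CPU hours.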

ARIZONA RESEARCH

2,753 invention disclosures: knowledge development that can change the world

537 licenses & options for university inventions

615 patents issued, ranked No. 28 among universities worldwide granted U.S. utility patents

135 startups launched commercializing UArizona inventions

Source: National Science Foundation Higher Education Research and Development Survey

FY23 Metrics

RESEARCH DATA CENTER USAGE

- Principal Investigators (PIs) Using HPC Systems: 545

- Active Awards Using HPC Systems: 1.3K

- Active Root Awards Using HPC Systems: 2K

- Total Sponsored Research Expenditures by Investigators Using HPC Services: $386M

- Top 100 PIs Using HPC: 79%

SUPERCOMPUTING CAPACITY

- Total Cores of All HPC Systems: 43.8K

- Monthly Faculty Compute Allocation: 177K hrs./mo.

- Yearly Faculty Compute Allocation: 2.12M hrs.
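The yearly allocation figure follows directly from the monthly one; this short check simply multiplies the reported 177K monthly hours by 12.

```python
MONTHLY_ALLOCATION_HRS = 177_000  # "177K hrs./mo." from the FY23 metrics
yearly = MONTHLY_ALLOCATION_HRS * 12
print(f"{yearly / 1e6:.2f}M hrs. per year")  # 2.12M hrs. per year
```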

SERVICES

- Supercomputing (HPC)

- Regulated Research Environment

- Research Support Services

- UAVITAE